Google's Reading Mode app expands support to more social media and email apps

Google released its Reading Mode app in 2022, and a new update now makes it considerably more useful. With this update, Reading Mode also works in Gmail, X (formerly Twitter), and Threads, along with other social media and email apps.

As for what the app does, it makes on-screen text easier to read by turning each page you view into plain text. As mentioned above, the new update brings that support to Gmail, X, Threads, and other email and social media apps, which is a welcome addition.

Furthermore, if you are on the go, the app can also read the text aloud so that you can listen to it. The developers do note that the app may not work properly with every social media and email app yet, though support may broaden in the future.

Do you use the Reading Mode app? Let us know in the comment box. Also, tell us whether you liked this post or not.

For More Such Updates Follow Us On – Telegram, Twitter, Google News, WhatsApp and Facebook

Google is Developing Gemini for Headphones

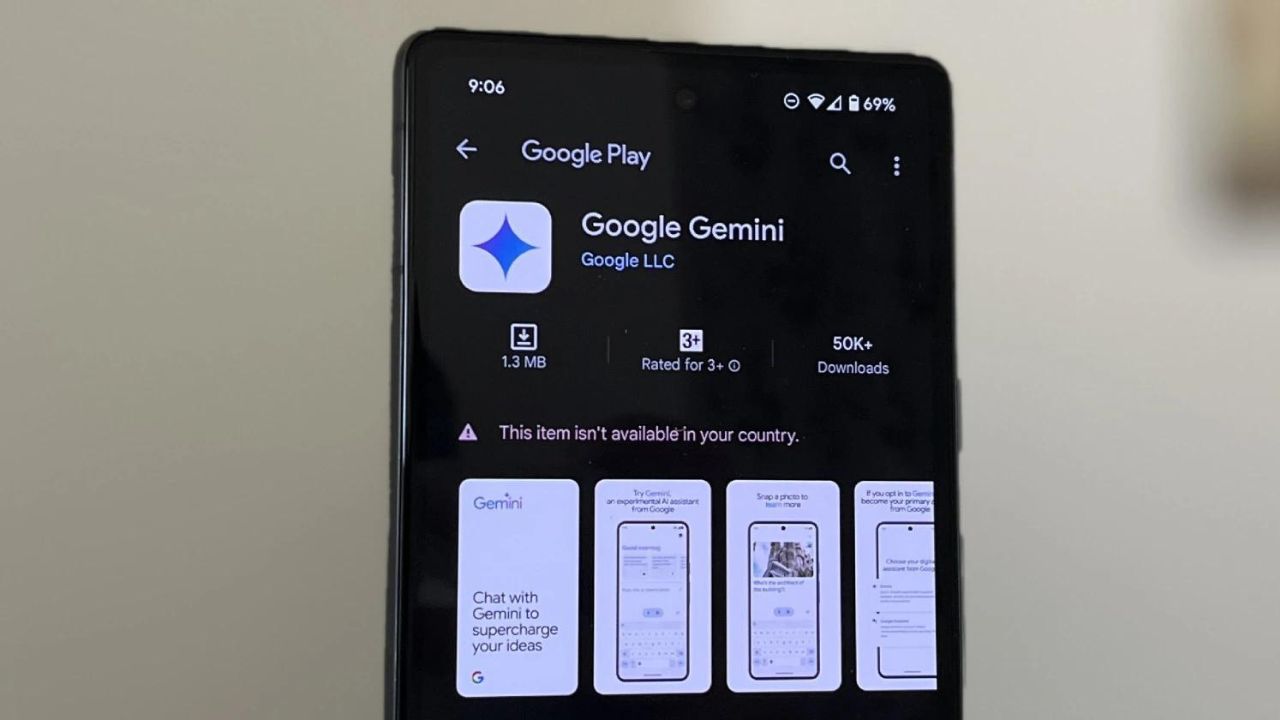

Google recently renamed its AI assistant from Bard to Gemini. The Gemini app is already available in the U.S., Canada, Asia Pacific, Latin America, and Africa. The app can also take the place of Google Assistant on both Android and iOS.

Note: The Gemini app is expected to launch in India by next week.

Some reports also suggest that Gemini will soon be accessible from your headphones. 9to5Google recently examined the beta version of the Google app (15.6), which contained the message below:

Gemini mobile app is working on expanding availability to make it accessible on your headphones

Some headphones have a button or a gesture that activates a voice assistant. Currently, that assistant is Google Assistant, even if Gemini has replaced it on the smartphone. For example, Google’s Pixel Buds Pro still work with the old service, as indicated by its voice and capabilities, according to the source.

For now, Google is primarily focused on launching the Gemini app for smartphones in all regions, especially Europe. When Gemini expands to audio wearables, users can expect features such as customizable playback speed and shorter answers.

What do you think about the Gemini phone app? Let us know in the comment box. Also, tell us whether you liked this post or not.

Featured Image from piunikaweb.com

Everything you need to know about Google One’s new AI Premium Plan

Sundar Pichai, CEO of Google and Alphabet, has posted a thread on his X account. In that thread, Pichai mentioned that Google One (Google’s subscription service) has crossed 100 million subscribers, and that Google is looking forward to “building on that momentum” with the newly launched AI Premium Plan.

Features you get after subscribing to Google One AI Premium Plan

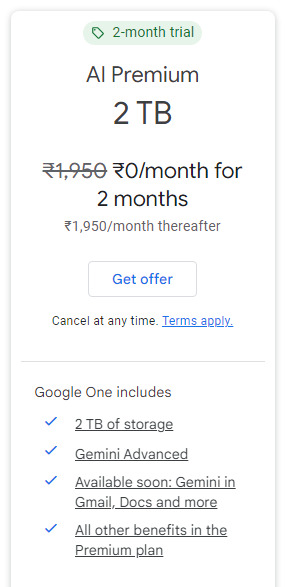

The new AI Premium Plan offers AI features powered by Gemini, Google’s recently renamed AI model (previously Bard). The headline feature of the Google One AI Premium Plan is Gemini Advanced.

Gemini is also coming to Gmail, Google Docs, and other Google apps very soon. Moreover, the plan offers 2TB of storage, 10% back from the Google Store, more Google Meet features such as longer group video calls (the limit without a subscription is one hour), and more Google Calendar features such as enhanced appointment scheduling.

Pricing of Google One AI Premium Plan

As for pricing, the Google One AI Premium Plan costs Rs. 1,950 ($23.49) per month. The other Google One plans, Basic and Standard, cost Rs. 130 ($1.57) and Rs. 650 ($7.83), respectively. You can learn more about Google One Plans by clicking here.

Note: Currently, Google One AI Premium is available free for 2 months.

Will you purchase the new Google One AI Premium Plan? Let us know in the comment box. Also, tell us whether you liked this post or not.

Google Pixels are receiving the February update

Since last year, Google has stopped rolling out new updates on the first Monday of every month for its Google Pixel series. This Monday, however, Google rolled out a new update.

Supported Google Pixel phones have started receiving the update over the air (OTA). If you have not received the February update yet, you may have to wait for some time. After installing the update, the software version will be UQ1A.240205.002 on all global models except the Google Pixel 8 and Google Pixel 8 Pro, which get UQ1A.240205.004.

All SoftBank (Japan) models get UQ1A.240205.002.B1 except, say it with us, the Google Pixel 8 and Google Pixel 8 Pro, which get UQ1A.240205.004.B1. Finally, Verizon models get UQ1A.240205.002.A1, except the Google Pixel 8 and 8 Pro, which get UQ1A.240205.004.A1.
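For readers who want to check the build on their own phone, the pattern above can be written as a small lookup. This is only a sketch based on the build numbers listed in this post. The function name `expected_build` and the variant labels are made up for illustration and are not part of any Google tool.

```python
# Build numbers as listed in this post (February update).
BASE_BUILD = "UQ1A.240205.002"      # all supported Pixels except the Pixel 8 series
PIXEL_8_BUILD = "UQ1A.240205.004"   # Pixel 8 and Pixel 8 Pro

# Hypothetical variant labels mapped to the suffix each one adds.
VARIANT_SUFFIX = {"global": "", "softbank": ".B1", "verizon": ".A1"}

PIXEL_8_SERIES = {"Pixel 8", "Pixel 8 Pro"}


def expected_build(model: str, variant: str = "global") -> str:
    """Return the build number this post lists for a model and variant."""
    base = PIXEL_8_BUILD if model in PIXEL_8_SERIES else BASE_BUILD
    return base + VARIANT_SUFFIX[variant]


print(expected_build("Pixel 8 Pro", "verizon"))
print(expected_build("Pixel Fold", "softbank"))
```

Compare the result with the build number shown in your phone's settings to confirm that the February update is installed.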

What are the Improvements?

For the Google Pixel 8 series, i.e. the Google Pixel 8 and Google Pixel 8 Pro, the February update brings general improvements to system stability and performance. It also fixes an issue in which the display gets corrupted under some conditions, and it improves general Wi-Fi stability and performance.

For the Google Pixel Fold, the update includes a fix for an issue with the outer display. Furthermore, the February update for Pixels also includes a fix for stability and performance issues with some third-party apps.

Have you received the February update on your Pixel? Let us know in the comment box. Also, tell us whether you liked this post or not.
